GPT‑5.2 Migration Playbook for AU: How to Move from GPT‑4/5/5.1 Safely

Table of Contents

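1. Assessing Your GPT‑4/5/5.1 Footprint and AU Risk Profile

2. Designing a Tiered GPT‑5.2 Architecture for Cost and Reliability

3. AU‑Ready Governance, Privacy, and Hallucination Controls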

4. Testing, A/B Rollouts, and Regression Control in Production

Introduction: Why GPT‑5.2 Migration Matters for AU

GPT‑5.2 migration is about more than swapping out a model name in your code. For Australian organisations, it’s a chance to standardise on a safer, more capable AI stack while tightening compliance, cost control, and reliability, building on patterns from the complete GPT‑5 migration guides and OpenAI’s own latest GPT‑5.2 model documentation.

OpenAI positions GPT‑5.2 as an incremental evolution within the GPT‑5 family, offering similar context limits and capabilities to GPT‑5 and GPT‑5.1 while improving performance on safety and capability benchmarks. On paper, it shares the same 400,000 token context window and 128,000 max output tokens as GPT‑5 and GPT‑5.1, and OpenAI reports that variants like gpt‑5.2‑thinking and gpt‑5.2‑instant perform on par with or better than their 5.1 counterparts on safety evals, with comparable capability to gpt‑5.1‑codex‑max. In practice, that “drop‑in” promise only holds if you wrap it with the right governance, routing, and testing, following step‑by‑step upgrade patterns outlined in resources like GPT‑5.2 in ChatGPT and Microsoft’s Foundry implementation notes. Otherwise, small changes in behaviour can ripple through your customer support bots, internal copilots, and agentic workflows in ways that risk OAIC, ASIC, and APRA headaches.

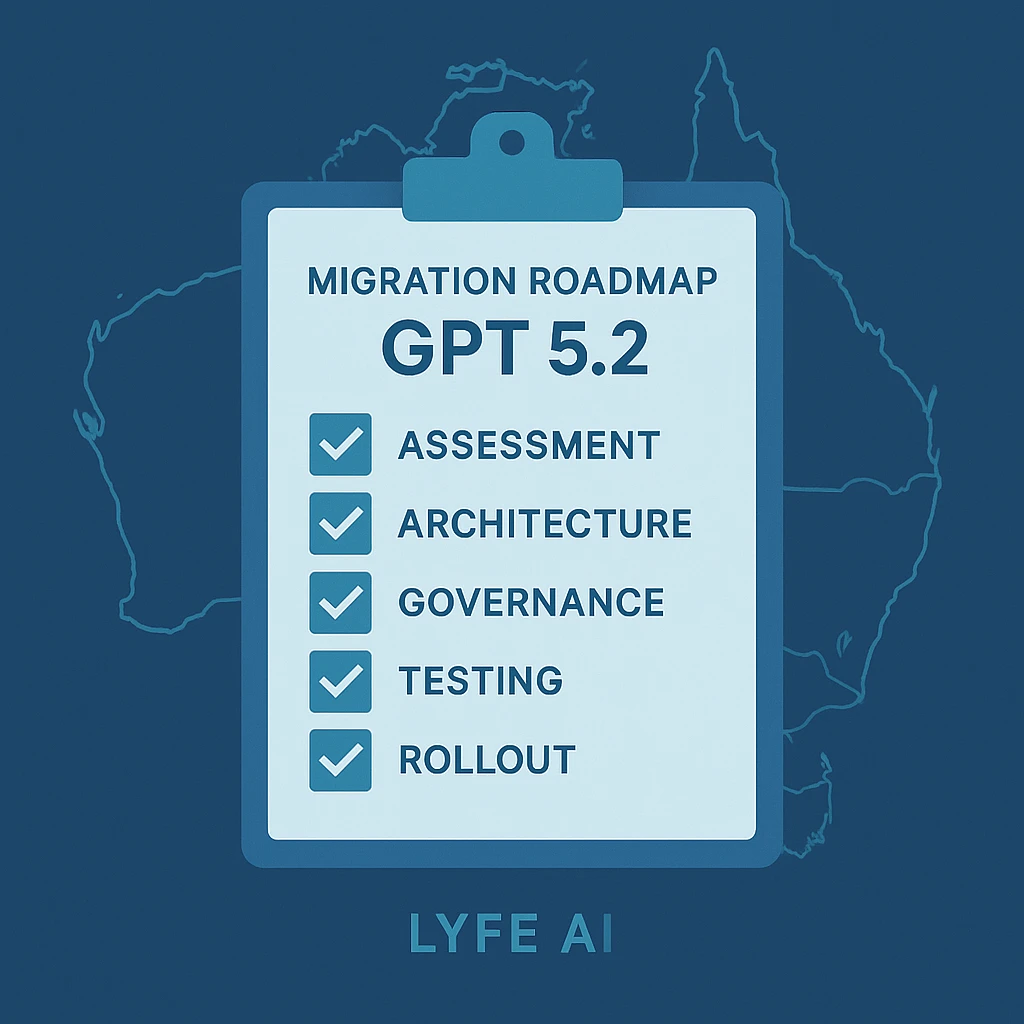

This playbook walks Australian teams through a structured migration from GPT‑4/5/5.1 to GPT‑5.2. You’ll see how to assess your current deployments, refactor to a multi‑tier GPT‑5.2 architecture, align with Australian privacy and regulatory expectations, and run staged rollouts with clear rollback paths, similar to the patterns covered in specialist GPT‑5 migration guides.

LYFE AI already helps local organisations design, deploy, and optimise AI solutions tuned for Australian conditions. Our managed AI services wrap advanced standard and custom models, including GPT‑5.2, in observability, RAG governance, and risk controls, with all data encrypted, stored, and processed within Australia. From model customisation and integrations to continuous optimisation and Australian-based support, we deliver secure, compliant AI that aligns with local regulations and real-world operating needs, so you can move fast without playing compliance roulette, whether you’re deploying AI personal assistants, AI IT support for Australian SMBs, or secure AI transcription workflows.

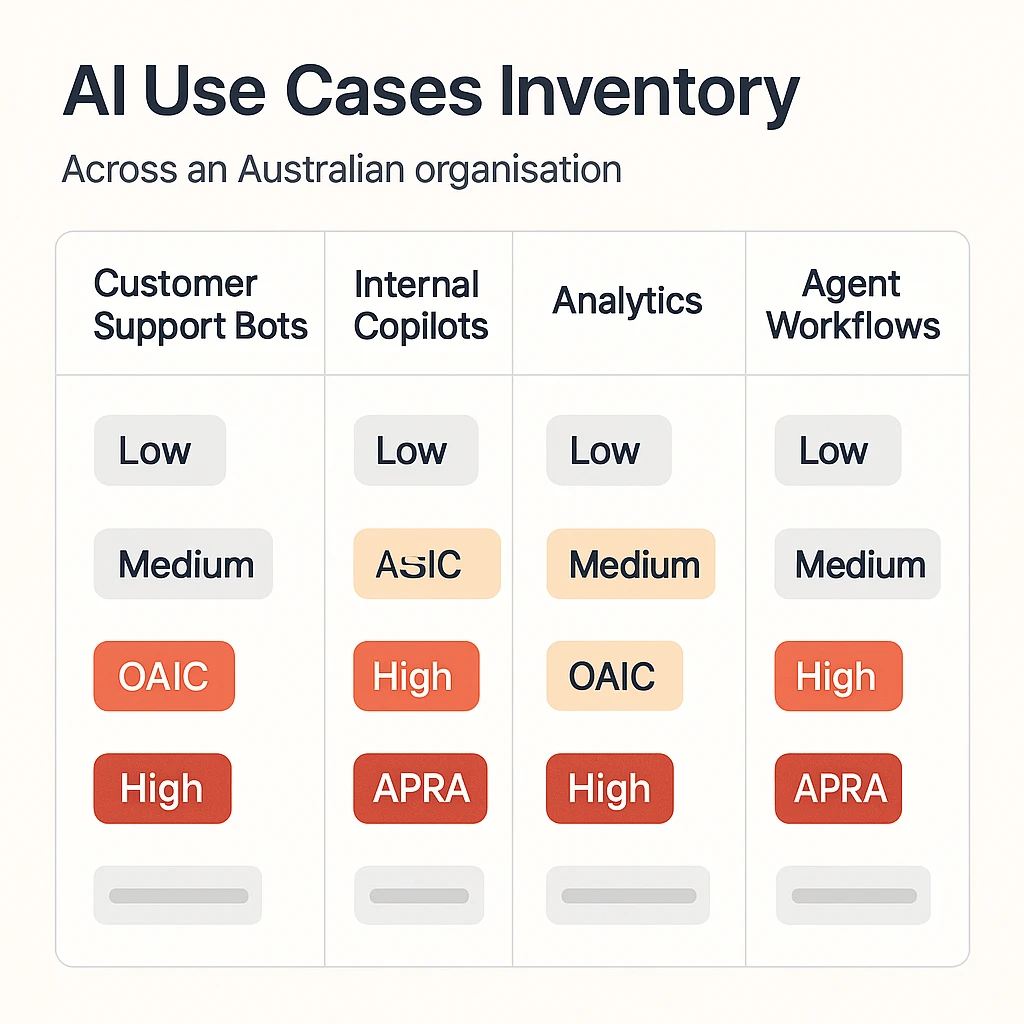

1. Assessing Your GPT‑4/5/5.1 Footprint and AU Risk Profile

Before touching a single prompt, you need a clear inventory of where GPT‑4/5/5.1 is running today. Many AU organisations discover that models are quietly embedded in half a dozen tools: a customer‑facing chatbot, a contact‑centre copilot, a policy Q&A bot for staff, some spreadsheet scripts, maybe an internal “labs” portal, or even consumer‑facing assistants similar to a secure Australian AI assistant.

Start with a simple but rigorous inventory exercise:

- List every system, bot, or workflow using GPT‑4/5/5.1.
- For each, capture: purpose (e.g., FAQ, advice, summarisation), audience (internal vs external), traffic volume, and data sensitivity.
- Note all dependencies: RAG indexes, tools/APIs, downstream systems that consume model output (like CRMs expecting a certain JSON schema).

Next, classify each use case by AU‑specific risk:

- High risk: anything touching financial advice, health, employment decisions, credit, or regulated complaints handling.
- Medium risk: internal policy Q&A, HR guidance, or customer service triage where humans remain in the loop.
- Low risk: marketing ideation, code suggestions, internal productivity tools for staff.

For each risk tier, document what a hallucination would actually mean in the Australian context. Could it breach Australian Consumer Law by misleading a customer? Could it misinterpret APRA standards or Fair Work obligations? This framing turns “model upgrade” into a controlled change rather than an experiment, echoing best‑practice upgrade runbooks in guides such as How to migrate from older GPT models to GPT‑5.1.
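One way to make the inventory and risk classification concrete is a small record type plus a classifier. The sketch below is illustrative only: the `UseCase` fields, the domain keyword sets, and the rule that a medium-risk domain without human review is escalated to high are all assumptions layered on the tiers described above, not a prescribed schema.

```python
from dataclasses import dataclass, field

# Hypothetical domain labels, loosely mirroring the risk tiers in this section.
HIGH_RISK_DOMAINS = {"financial advice", "health", "employment decisions",
                     "credit", "regulated complaints"}
MEDIUM_RISK_DOMAINS = {"internal policy q&a", "hr guidance",
                       "customer service triage"}


@dataclass
class UseCase:
    name: str
    purpose: str            # e.g. FAQ, advice, summarisation
    audience: str           # "internal" or "external"
    daily_requests: int     # rough traffic volume
    data_sensitivity: str   # e.g. "low", "personal", "sensitive"
    domain: str
    human_in_loop: bool = False
    dependencies: list[str] = field(default_factory=list)


def risk_tier(use_case: UseCase) -> str:
    """Return "high", "medium", or "low" for one inventoried use case."""
    domain = use_case.domain.lower()
    if domain in HIGH_RISK_DOMAINS:
        return "high"
    if domain in MEDIUM_RISK_DOMAINS:
        # Assumption: medium only applies while a human stays in the loop.
        return "medium" if use_case.human_in_loop else "high"
    return "low"
```

Keeping the inventory as data means the same records can later drive routing rules and regression suites rather than living in a spreadsheet nobody updates.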

LYFE AI often wraps this into a light AI runbook for clients, aligned to OAIC and sector‑specific expectations. Our AI strategy consulting helps you map systems, risks, and stakeholders so migration doesn’t get blocked by risk committees at the eleventh hour, and sits alongside specialist offerings like Lyfe AI’s services catalogue for end‑to‑end delivery.

2. Designing a Tiered GPT‑5.2 Architecture for Cost and Reliability

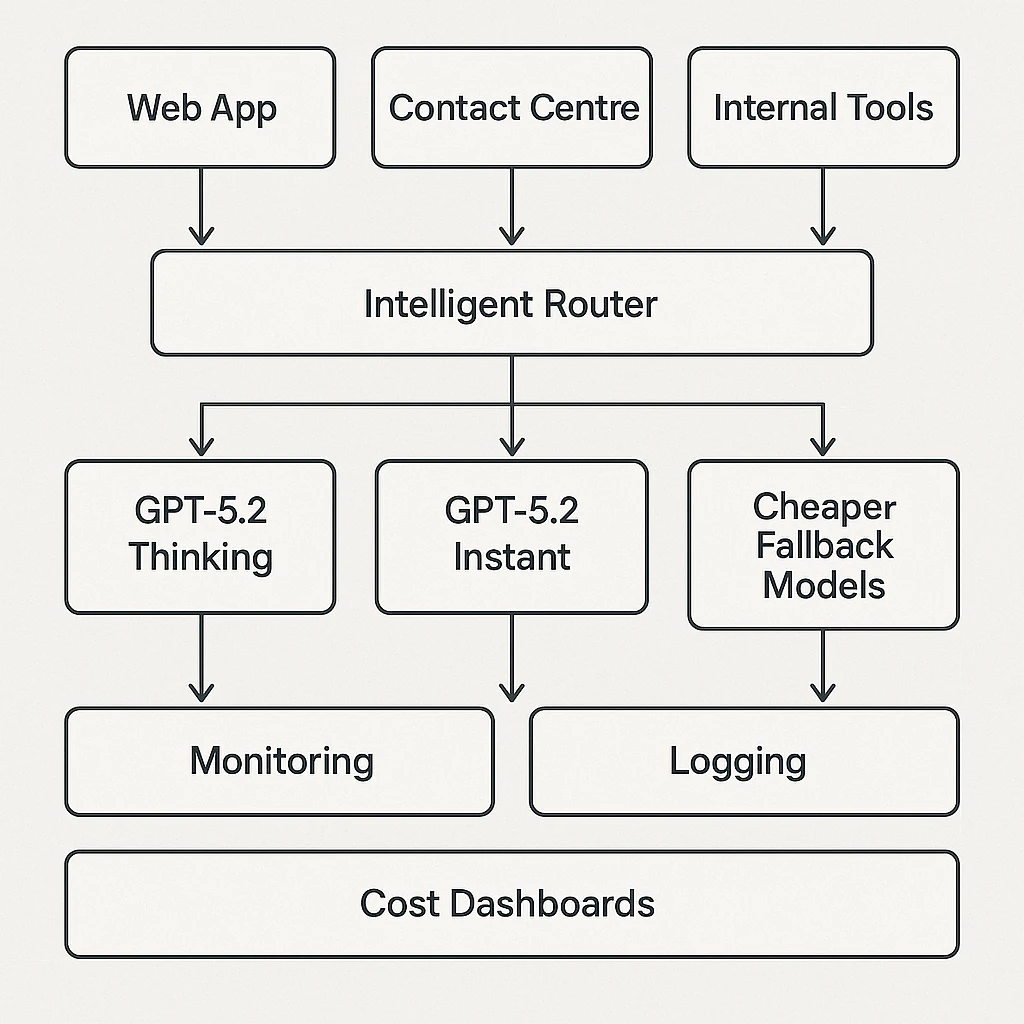

Moving to GPT‑5.2 is the perfect time to move off a single‑model architecture. Instead of sending every prompt to the biggest, most expensive model, AU organisations should adopt a three‑tier pattern, similar to modern OpenAI GPT‑5.2 deployment architectures:

- Smaller / cheaper models for routing, classification, intent detection, and simple FAQs. These models are low‑cost and low‑risk, ideal for high‑volume front doors.
- gpt‑5.2‑instant for most “balanced” workloads: customer service answers, internal copilots, document summarisation, and structured workflows that still require good reasoning but lower latency and cost.
- gpt‑5.2‑thinking for complex or high‑risk flows: AU regulatory interpretation, financial comparisons, multi‑document case analysis, and agentic planning with tools.

This tiered model strategy aligns nicely with AU risk appetites and cloud budgets. High‑volume, low‑stakes flows can stay cheap and snappy. High‑stakes, lower‑volume queries get the “brains” and deeper reasoning they deserve, even if that means higher latency.

- Low risk + low complexity → small model or gpt‑5.2‑instant with minimal reasoning.
- Medium risk or medium complexity → gpt‑5.2‑instant with robust RAG and citations.
- High risk + high complexity → gpt‑5.2‑thinking, RAG, verification, and mandatory human‑in‑the‑loop.
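The routing rules above can be sketched as a small lookup function. This is a minimal illustration, not a reference design: the model strings reuse this article's labels (`small-model` is a placeholder), and the handling of mixed cases the rules don't cover, such as high risk with low complexity, is an assumption that errs on the conservative side.

```python
from dataclasses import dataclass

LEVELS = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class Route:
    model: str
    use_rag: bool
    verify: bool
    human_review: bool


def choose_route(risk: str, complexity: str) -> Route:
    """Map a (risk, complexity) pair onto one of the three tiers."""
    r, c = LEVELS[risk], LEVELS[complexity]
    if r == 0 and c == 0:
        # Low risk + low complexity: cheapest tier, no extra controls.
        return Route("small-model", use_rag=False, verify=False, human_review=False)
    if max(r, c) == 1:
        # Medium risk or medium complexity: balanced tier with RAG and citations.
        return Route("gpt-5.2-instant", use_rag=True, verify=False, human_review=False)
    # Assumption: anything "high" on either axis goes to the thinking tier;
    # human review is mandatory only when the risk itself is high.
    return Route("gpt-5.2-thinking", use_rag=True, verify=True, human_review=(r == 2))
```

For example, `choose_route("high", "high")` returns the thinking tier with RAG, verification, and human review all switched on.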

GPT‑5.2 also exposes controls for reasoning effort, and for temperature and top‑p when reasoning_effort is set to none. For “safe factual AU‑compliance” mode, set reasoning effort to none and use low temperature and deterministic decoding with concise outputs, which keeps generations tight, predictable, and easy to audit. For exploratory ideation, relax those constraints, following prompt design patterns similar to those covered in Mastering AI prompts best‑practice guides.
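A named set of decoding profiles keeps these settings out of individual prompts. The sketch below is a plain-Python illustration: the profile names, the numeric values, and the flat parameter keys are assumptions for the example rather than an exact API request shape, and the validation simply encodes the constraint described above that sampling controls apply only when reasoning effort is none.

```python
SAMPLING_KEYS = {"temperature", "top_p"}

# Hypothetical profiles; tune the values against your own regression suite.
PROFILES = {
    "au_compliance": {"reasoning_effort": "none", "temperature": 0.0,
                      "top_p": 1.0, "max_output_tokens": 400},
    "ideation": {"reasoning_effort": "none", "temperature": 0.9,
                 "top_p": 0.95, "max_output_tokens": 1200},
    "deep_analysis": {"reasoning_effort": "high", "max_output_tokens": 2000},
}


def request_params(profile: str) -> dict:
    """Return a copy of the profile, rejecting invalid parameter combinations."""
    params = dict(PROFILES[profile])
    uses_sampling = SAMPLING_KEYS.intersection(params)
    if params["reasoning_effort"] != "none" and uses_sampling:
        raise ValueError(
            f"{profile}: {sorted(uses_sampling)} require reasoning_effort='none'"
        )
    return params
```

Validating at lookup time means a misconfigured profile fails in testing rather than silently changing behaviour in production.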

LYFE AI’s enterprise AI automation services implement this routing, along with cost telemetry and dashboards so you can actually see which flows are burning budget and adjust profiles in real time, whether they power customer‑facing AI partners or internal assistants.

3. AU‑Ready Governance, Privacy, and Hallucination Controls

The big fear when upgrading models is not “Will it be smarter?” but “Will it make things up in ways that get us sued?”. GPT‑5.2 takes a real leap forward in reasoning compared to earlier models, but even with sharper logic and fewer mistakes, it can still hallucinate. Benchmarks back this up: GPT‑5.2, especially the Thinking variant, sets a new bar on advanced reasoning tests, jumping from roughly 17.6% to about 53–54% on the ARC‑AGI‑2 abstract reasoning benchmark and posting higher scores on demanding scientific reasoning tasks like GPQA Diamond. Product reports and early integrations also show roughly 30% fewer errors versus previous versions. That’s meaningful progress, but it’s not magic: the model is more reliable, not infallible. Hallucinations need to be engineered out through layered controls, as seen in enterprise‑grade GPT‑5.2 deployments like Foundry’s auditable GPT‑5.2 workflows.

A strong AU‑ready control stack has three layers:

3.1 Design‑time controls

- RAG as the default for factual tasks: Ground GPT‑5.2 on curated AU‑specific corpora (Fair Work Act, APRA prudential standards, internal product terms, policies). Enforce “answer only from these documents or say ‘I don’t know’”.
- Prompt patterns that forbid guessing: System instructions should explicitly require refusals when context is missing, mandate citations, and forbid invented laws, statistics, or URLs.
- Conservative decoding for high‑risk domains: Low temperature, greedy or low top‑p decoding, and constrained output formats (JSON, fixed templates).
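As a rough illustration of the grounding and refusal patterns above, the helper below assembles a system instruction and a numbered context block. The wording of the instruction, the refusal sentence, and the `build_grounded_messages` name are assumptions for this sketch; the message list uses the common role/content shape and would be adapted to whichever client you call.

```python
REFUSAL = "I don't know based on the provided documents."


def build_grounded_messages(question: str, documents: list[tuple[str, str]]) -> list[dict]:
    """Build chat messages that restrict answers to the supplied documents.

    documents is a list of (title, text) pairs returned by your retriever.
    """
    context = "\n\n".join(
        f"[{i}] {title}\n{text}" for i, (title, text) in enumerate(documents, start=1)
    )
    system = (
        "You answer questions for an Australian organisation.\n"
        "Use ONLY the numbered documents in the context.\n"
        "Cite the document number, e.g. [1], after every factual claim.\n"
        "Never invent laws, statistics, or URLs.\n"
        f"If the documents do not contain the answer, reply exactly: {REFUSAL}"
    )
    user = f"Context:\n{context or '(no documents retrieved)'}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

Keeping the refusal sentence as a constant lets regression tests check for it verbatim.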

3.2 Run‑time safeguards

- Guardrails and safety filters tuned for AU topics (gambling, domestic violence, credit hardship).
- Observability: logging prompts, context, tool calls, outputs, and user feedback to spot drift and spikes in “I’m not sure” answers or complaints.
- Verification passes that re‑check whether each claim is supported by retrieved documents before returning answers on high‑stakes flows.
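To show the shape of a verification pass, here is a deliberately naive sketch that flags answer sentences with little lexical overlap with any retrieved document. Word overlap is a stand-in chosen so the example runs on its own; a production check would more likely use an entailment model or a second model call, and the `min_overlap` threshold is an arbitrary assumption.

```python
import re


def _tokens(text: str) -> set[str]:
    """Lowercased alphanumeric word set for a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_claims(answer: str, documents: list[str], min_overlap: float = 0.6) -> list[str]:
    """Return answer sentences whose words are poorly covered by every document."""
    doc_tokens = [_tokens(doc) for doc in documents]
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        # Best coverage of this sentence's words by any single document.
        best = max((len(words & doc) / len(words) for doc in doc_tokens), default=0.0)
        if best < min_overlap:
            flagged.append(sentence)
    return flagged
```

On a high-stakes flow, a non-empty result would block the answer or route it to human review rather than returning it to the user.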

3.3 Governance & documentation

Map all of this to AU frameworks: Privacy Act principles (data minimisation, purpose limitation), Australian Consumer Law, ASIC digital advice guidance, and industry codes. Keep a paper trail—risk assessments, evaluation results, monitoring dashboards—so you can show regulators how GPT‑5.2 is controlled, not just deployed, taking cues from structured migration practices such as the AI SDK 4.x to 5.0 migration guide.

LYFE AI’s AI governance and compliance practice helps design these layers for AU conditions, including access controls, logging patterns, and human‑in‑the‑loop tiers for health, financial, and public‑sector use cases, alongside domain‑specific solutions like AI transcription for clinician‑patient interactions.

4. Testing, A/B Rollouts, and Regression Control in Production

The fastest way to lose trust in AI internally is to break something that was already working. Newer models can improve headline benchmarks while still getting worse on your specific prompts, RAG setup, or tool sequences. That’s why migration to GPT‑5.2 needs a testing and rollout strategy that looks more like a cloud platform change than a casual feature tweak, following staged upgrade principles from resources like GPT‑5 step‑by‑step migration playbooks.

4.1 Build an AU‑focused regression suite

For each major use case, build a frozen test set of 100–1,000 prompts:

- Real historical queries from your AU customers or staff.
- Edge cases involving tricky regulations (GST edge cases, superannuation quirks, Fair Work interpretations).
- Adversarial prompts trying to bypass disclaimers or evoke US‑centric content.

Capture “gold” expectations per test: acceptance criteria, required disclaimers, required tool‑use sequences, and refusal behaviour (“must say I don’t know if…”) so you can automatically compare GPT‑5.2 against GPT‑4/5.1.
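A “gold” expectation can be captured as a small data record with an automatic checker. The field names and the refusal markers below are assumptions for illustration; real suites usually add graded scoring alongside hard pass/fail rules like these.

```python
from dataclasses import dataclass, field

# Hypothetical markers; match these to the refusal wording in your own prompts.
REFUSAL_MARKERS = ("i don't know", "i'm not sure")


@dataclass
class GoldCase:
    prompt: str
    must_include: list[str] = field(default_factory=list)      # e.g. disclaimers
    must_not_include: list[str] = field(default_factory=list)  # e.g. US-centric terms
    expected_tools: list[str] = field(default_factory=list)    # ordered tool sequence
    must_refuse: bool = False


def check(case: GoldCase, output: str, tools_called: list[str]) -> list[str]:
    """Return a list of failure messages; an empty list means the case passed."""
    text = output.lower()
    failures = []
    for phrase in case.must_include:
        if phrase.lower() not in text:
            failures.append(f"missing required text: {phrase}")
    for phrase in case.must_not_include:
        if phrase.lower() in text:
            failures.append(f"forbidden text present: {phrase}")
    if case.expected_tools and tools_called != case.expected_tools:
        failures.append(f"tool sequence {tools_called} != {case.expected_tools}")
    if case.must_refuse and not any(marker in text for marker in REFUSAL_MARKERS):
        failures.append("expected a refusal")
    return failures
```

Because the checker is independent of any model, the same cases can be run against the old and new models without modification.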

4.2 Offline evaluation then shadow mode

Step through a simple path:

- Run your regression suite against the current model (GPT‑4/5.1) to set a baseline.
- Clone prompts, RAG configs, and tools to GPT‑5.2; run the same suite offline; measure accuracy, hallucination rate, cost, and latency deltas.
- Tune prompts and parameters until GPT‑5.2 matches or beats the baseline on each risk tier.
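The “matches or beats the baseline on each risk tier” gate can be expressed in a few lines. This sketch assumes each test result has already been reduced to a `(risk_tier, passed)` pair; it compares pass rates only, so cost and latency deltas would need their own thresholds.

```python
from collections import defaultdict


def pass_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute the pass rate per risk tier from (tier, passed) pairs."""
    totals = defaultdict(lambda: [0, 0])  # tier -> [passed, total]
    for tier, passed in results:
        totals[tier][0] += int(passed)
        totals[tier][1] += 1
    return {tier: p / n for tier, (p, n) in totals.items()}


def matches_baseline(baseline: list[tuple[str, bool]],
                     candidate: list[tuple[str, bool]]) -> bool:
    """True only if the candidate is at least as good on every baseline tier."""
    base, cand = pass_rates(baseline), pass_rates(candidate)
    return all(cand.get(tier, 0.0) >= rate for tier, rate in base.items())
```

Gating per tier prevents a gain on low-risk prompts from masking a regression on high-risk ones.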

Only then move to shadow mode: mirror real production traffic to GPT‑5.2 but keep its answers hidden. Use this to refine prompts further and catch odd regressions in tone or refusal patterns.
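Shadow mode can be sketched as a wrapper that always returns the current model's answer while recording what the candidate would have said. The `primary` and `shadow` callables and the list-based log are placeholders for this example; in production the shadow call would normally run asynchronously so it cannot add latency to the user-facing path.

```python
from typing import Callable


def handle_request(prompt: str,
                   primary: Callable[[str], str],
                   shadow: Callable[[str], str],
                   log: list[dict]) -> str:
    """Serve the primary answer; record the shadow answer without exposing it."""
    answer = primary(prompt)
    record = {"prompt": prompt, "primary": answer}
    try:
        record["shadow"] = shadow(prompt)
    except Exception as exc:  # a shadow failure must never affect the user
        record["shadow_error"] = repr(exc)
    log.append(record)
    return answer
```

The logged pairs can then be fed through the same regression checks you use offline to spot differences in tone or refusal patterns.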

4.3 Canary rollouts with kill‑switches

When you’re ready to go live, don’t big‑bang. Use canary releases:

- Start with 5–10% of traffic routed to GPT‑5.2.
- Track CSAT, escalation rates, hallucination incidents, and costs versus control.
- Ramp to 25%, 50%, then 100% only if metrics stay within agreed bounds.

Crucially, maintain a configuration‑level rollback: feature flags and routing controls that can send all traffic back to GPT‑4/5.1 within minutes, without re‑deploying code.
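A canary split with a configuration-level kill switch can be as simple as hashing a stable user identifier into a bucket. In this sketch the config keys and model names are assumptions; the config dict stands in for whatever feature-flag or remote-config service you already run, so flipping `kill_switch` needs no redeploy.

```python
import hashlib


def bucket(user_id: str) -> int:
    """Deterministically map a user to a bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % 100


def pick_model(user_id: str, config: dict) -> str:
    """Route a user to the canary or control model based on live config."""
    if config["kill_switch"]:
        return config["control_model"]  # instant rollback path
    if bucket(user_id) < config["canary_percent"]:
        return config["canary_model"]
    return config["control_model"]


# Hypothetical starting configuration for a 10% canary.
CANARY_CONFIG = {
    "kill_switch": False,
    "canary_percent": 10,
    "canary_model": "gpt-5.2-instant",
    "control_model": "gpt-5.1",
}
```

Hashing the user ID rather than sampling per request keeps each user on one model for the whole ramp, which makes CSAT and escalation comparisons cleaner.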

LYFE AI’s AI observability and testing stack plugs into your existing systems, giving product, risk, and engineering teams a shared dashboard for GPT‑5.2 rollouts across channels, whether they support AI‑augmented teaching workflows or broader enterprise services.

5. Region‑Aware Infrastructure and Data‑Residency Choices

Australian teams don’t operate in a vacuum. You have to navigate Azure region availability, network latency from AU, and internal rules about data residency and sovereignty. GPT‑5.2 and related frontier models won’t always land in Australia East on day one, so your migration plan needs a region‑aware design informed by reference deployments such as GPT‑5.2 in Microsoft Foundry.

5.1 Short‑term patterns when GPT‑5.2 isn’t in AU yet

In the short term, many organisations will:

- Use OpenAI’s global API or non‑AU Azure regions for low‑sensitivity workloads (e.g., generic coding help, non‑identifiable FAQs).
- Delay migration for sensitive flows (health, credit, public‑sector records) until AU‑hosted GPT‑5.2 is available, or
- Adopt a hybrid pattern: perform local pre‑processing and anonymisation in AU, then send de‑identified content to GPT‑5.2 overseas.

This hybrid design needs clear documentation for privacy and risk teams: what fields get stripped or hashed, what logs are kept and for how long, and which traffic is blocked entirely from leaving AU.
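The strip-or-hash step of a hybrid pattern might look like the sketch below. Everything here is illustrative: the field names, the two regular expressions, and the 16-character keyed hash are assumptions, and simple patterns like these will not catch every identifier, so treat this as a starting point for review with your privacy team rather than a complete de-identification routine.

```python
import hashlib
import hmac
import re

# Illustrative patterns only; they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
AU_PHONE = re.compile(r"(?:\+61 ?|0)[2-478](?:[ -]?\d){8}")


def pseudonymise(value: str, key: bytes) -> str:
    """Keyed hash so the same input maps to the same token without exposing it."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def deidentify(record: dict, key: bytes,
               drop: tuple = ("name", "address"),
               hash_fields: tuple = ("customer_id",),
               free_text: tuple = ("note",)) -> dict:
    """Drop, hash, or redact fields before a record leaves AU infrastructure."""
    out = {}
    for field_name, value in record.items():
        if field_name in drop:
            continue
        if field_name in hash_fields:
            out[field_name] = pseudonymise(str(value), key)
        elif field_name in free_text:
            text = AU_PHONE.sub("[PHONE]", str(value))
            out[field_name] = EMAIL.sub("[EMAIL]", text)
        else:
            out[field_name] = value
    return out
```

The `drop`, `hash_fields`, and `free_text` tuples double as the documentation privacy teams ask for: they state exactly which fields are removed, hashed, or redacted.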

5.2 Long‑term convergence to AU regions

As additional GPT‑5.2 capacity rolls out beyond its initial East US2 and Sweden Central (Global Standard) regions, your architecture should allow a clean repointing: configuration‑driven model endpoints, not hard‑coded URLs. Foundry’s model catalog already lets you access new models as they become available and switch between them with minimal friction, so the real constraint is how tightly you’ve coupled your app to a specific region or deployment. If you treat the model and region as config, not code, you’re ready to move quickly as more geographies—including potential Australia East/SE coverage—come online. That way, moving from a global endpoint to an AU region is a config change plus a regression run, not a rebuild, closely mirroring the endpoint‑switching patterns described in OpenAI’s model migration guidance.
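Treating model and region as configuration might look like the sketch below. The deployment names, the `example.invalid` endpoints, and the `existing-au-model` label are all placeholders invented for the example; the guard that keeps sensitive traffic on an Australian region is one possible policy, not a requirement stated by any provider.

```python
import json

# Hypothetical configuration; in practice this lives outside the codebase.
CONFIG = json.loads("""
{
  "deployments": {
    "gpt52-global": {"model": "gpt-5.2-instant", "region": "eastus2",
                     "endpoint": "https://example.invalid/global"},
    "legacy-au": {"model": "existing-au-model", "region": "australiaeast",
                  "endpoint": "https://example.invalid/au"}
  },
  "routing": {"low_sensitivity": "gpt52-global", "sensitive": "legacy-au"}
}
""")


def resolve_deployment(config: dict, sensitivity: str) -> dict:
    """Look up the deployment for a workload and enforce the residency rule."""
    deployment = config["deployments"][config["routing"][sensitivity]]
    if sensitivity == "sensitive" and not deployment["region"].startswith("australia"):
        raise RuntimeError("sensitive workloads must stay on an AU region")
    return deployment
```

When an AU-hosted deployment becomes available, the move is an added entry under `deployments` and a one-line change under `routing`, followed by a regression run.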

For APRA‑regulated entities and public‑sector bodies, this shift to AU regions often unlocks more ambitious automation projects. You’ll still need guardrails and RAG governance, but latency drops and data‑residency worries ease. Some experts argue, however, that moving workloads into AU regions doesn’t automatically unlock more ambitious automation for these teams. They point out that APRA’s guidance and major Australian legal and consulting analyses focus far more on risk, controls, and accountability than on automation uplift, and don’t explicitly link regional hosting to bolder automation agendas. On that view, AU regions are an important enabler on the compliance and latency front, but the real gating factors for automation remain governance, operational risk appetite, and change management rather than geography alone.

LYFE AI’s Azure OpenAI deployment services are designed around this region‑aware pattern, helping you plan short‑term and long‑term architectures that keep regulators and cloud‑cost dashboards happy while supporting use cases from secure AI transcription to SMB IT automation.

6. Practical Migration Checklist and Tips for AU Teams

To make all of this concrete, here’s a streamlined checklist you can work through with your product, engineering, and risk teams. Treat it like a runbook, not a philosophical essay, and cross‑reference it with detailed resources such as GPT‑5 feature and migration guides.

6.1 Assessment and design

- Inventory all GPT‑4/5/5.1 use cases, grouped by risk level and business unit.
- Identify which ones are good early candidates: internal tools, low‑risk FAQs, non‑sensitive data.
- Define your three‑tier model strategy (small models, gpt‑5.2‑instant, gpt‑5.2‑thinking) and routing rules.
- Agree with Risk/Legal on human‑in‑the‑loop policies and AU‑specific guardrails (e.g., no personalised financial advice).

6.2 Implementation

- Clone existing prompts and RAG setups into GPT‑5.2 with minimal changes.
- Introduce migration‑safe AU‑centric prompts: AU context, refusal behaviour, citations, and disclaimers baked in.
- Instrument observability: per‑request telemetry for model version, tokens, tools, latency, and business context.
- Set up cost dashboards by channel and use case so cost surprises don’t appear three months later.
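The telemetry and cost items above can start as one record per request and a simple aggregation. In this sketch the `RequestLog` fields are assumptions, and the prices are deliberately fake placeholder units, not real pricing; substitute the rates from your own provider agreement.

```python
from collections import defaultdict
from dataclasses import dataclass

# PLACEHOLDER prices per 1,000 tokens as (input, output); NOT real pricing.
PLACEHOLDER_PRICES = {
    "gpt-5.2-instant": (1.0, 2.0),
    "gpt-5.2-thinking": (3.0, 6.0),
}


@dataclass
class RequestLog:
    channel: str        # e.g. "web-chat", "contact-centre"
    use_case: str       # business context for the request
    model: str          # model version that served it
    input_tokens: int
    output_tokens: int
    latency_ms: float


def cost(log: RequestLog) -> float:
    """Cost of one request in placeholder price units."""
    in_price, out_price = PLACEHOLDER_PRICES[log.model]
    return log.input_tokens / 1000 * in_price + log.output_tokens / 1000 * out_price


def cost_by_channel(logs: list[RequestLog]) -> dict[tuple[str, str], float]:
    """Total cost keyed by (channel, use_case), ready for a dashboard."""
    totals = defaultdict(float)
    for log in logs:
        totals[(log.channel, log.use_case)] += cost(log)
    return dict(totals)
```

Recording the model version on every request is what later lets you separate cost changes caused by the migration from changes caused by traffic growth.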

6.3 Testing and rollout

- Build regression suites for each major workflow, including AU‑specific tricky cases.
- Run offline comparison (old vs GPT‑5.2), refine prompts, and fix tool schemas before going near customers.
- Move to shadow mode on live traffic, then canary rollouts with clear rollback triggers.
- Monitor escalation rates, hallucination incidents, tone issues, and cost per interaction for at least 4–8 weeks post‑migration.

6.4 Continuous improvement

- Log and label every serious hallucination or tool‑calling error; use them to update RAG, prompts, and tests.
- Review dashboards monthly with product, risk, and operations; adjust routing and reasoning profiles where needed.
- Maintain a living migration runbook so each upgrade (5.2 → 5.3, or new provider) is smoother than the last.

If this all feels like a lot to juggle on top of your day job, that’s exactly why LYFE AI exists. Our managed AI platform bakes these patterns in—tiered routing, AU‑specific governance, observability, and rollback—so your team can focus on the actual outcomes you want from GPT‑5.2, not the plumbing, while still taking advantage of domain solutions like AI personal assistants and AI partner matching tools.

Conclusion: Standardising on GPT‑5.2 with LYFE AI

GPT‑5.2 gives Australian organisations a realistic path to “serious” AI automation: better reasoning, richer tool use, more stable behaviour. But the value only lands if you migrate in a structured way—inventorying current use cases, adopting a tiered model strategy, hardening RAG and prompts for AU conditions, and rolling out with proper testing and rollback, as echoed in comprehensive GPT‑5.2 migration guidance.

Whether you’re running a single customer support bot or a fleet of internal copilots and agentic workflows, now is the right moment to standardise on a GPT‑5.2‑based stack that your risk and compliance teams can live with. You don’t have to design that stack from scratch; you can draw on proven patterns from step‑by‑step GPT‑5 upgrade guides and enterprise migration case studies.

LYFE AI helps AU organisations plan, build, and operate GPT‑5.2 deployments end‑to‑end—from strategy and migration design through to governance, observability, and managed operations. If you’re ready to move off GPT‑4/5/5.1 without gambling on compliance or customer trust, book a chat with our team and we’ll turn this playbook into a concrete migration plan for your organisation, including adjacent capabilities like secure transcription and AI IT support.

© 2025 LYFE AI. All rights reserved.

Frequently Asked Questions

Is GPT-5.2 a drop-in replacement for GPT-4, GPT-5, and GPT-5.1 in existing applications?

For most use cases, GPT-5.2 is close to a drop-in replacement because it maintains similar context limits and API patterns as GPT-5 and GPT-5.1. However, you should still treat it as a controlled upgrade: review system prompts, safety filters, and key workflows, then run side-by-side tests before switching traffic fully.

What are the main benefits of migrating to GPT-5.2 for Australian organisations?

GPT-5.2 offers improved safety, reasoning, and reliability compared with GPT-4, GPT-5, and GPT-5.1, while keeping similar context and output token limits. For Australian teams, it’s also a chance to tighten data residency, align with local privacy obligations (like the Privacy Act and APPs), and rationalise multiple legacy models into a standardised, easier-to-manage stack.

How do I assess my current GPT-4 or GPT-5 usage before migrating to GPT-5.2?

Start by cataloguing every place GPT models are used: APIs, internal tools, customer-facing features, and automations. For each use case, document prompt patterns, safety requirements, SLAs, and cost profiles, then prioritise them into tiers (mission-critical, important, experimental) to guide a staged GPT-5.2 rollout.

How do I design a cost-effective GPT-5.2 architecture for production workloads?

Use a tiered architecture: reserve the more capable GPT-5.2 variants for high-value or high-risk tasks, and use cheaper instant or smaller models for routine or high-volume jobs. Combine this with request routing, caching, and sensible token limits so you can control spend while still taking advantage of GPT-5.2’s quality gains where they matter most.

What is the safest way to roll out GPT-5.2 to production users?

Begin with offline evaluation using historical data, then move to A/B tests where a small percentage of live traffic is served by GPT-5.2 while the rest uses your current model. Track regression metrics like accuracy, latency, escalation rate, and user satisfaction, and only increase GPT-5.2 traffic when it meets or exceeds your defined thresholds.

How should I handle hallucinations and safety risks when upgrading to GPT-5.2?

Even though GPT-5.2 improves safety, you should keep or add guardrails such as stricter system prompts, output validation, and content filters. For high-risk domains (finance, legal, health), use retrieval-augmented generation (RAG) with your own verified data and implement human review workflows for edge cases.

What do Australian teams need to know about GPT-5.2 data residency and compliance?

Australian organisations should confirm which OpenAI regions process their traffic and store their logs, and configure region-aware routing where available to align with internal data residency policies. You should map GPT-5.2 use to Australian Privacy Principles (APPs), ensure no sensitive data is logged unnecessarily, and document these controls for audits and board reporting.

How does GPT-5.2 compare to GPT-5.1 for enterprise and AU government-style workloads?

GPT-5.2 generally matches GPT-5.1 on core capabilities and latency while improving on safety and reasoning benchmarks, making it better suited to regulated and public-sector style use cases. Because context windows and APIs remain similar, migrations from GPT-5.1 tend to be low-friction, focusing mainly on evaluation, governance checks, and rollout planning.

What practical steps should be on my GPT-5.2 migration checklist?

Your checklist should include: inventorying GPT usage, defining success metrics, updating prompts for GPT-5.2, setting up test suites, running offline and A/B tests, and preparing rollback plans. You should also review logging, monitoring, access controls, and documentation so teams know when and how to use GPT-5.2 safely.

How can LYFE AI help my Australian business migrate to GPT-5.2 safely?

LYFE AI offers a managed migration blueprint for Australian organisations, covering assessment of your current GPT-4/5/5.1 footprint, architecture design, and rollout strategy. They also help implement governance, data residency controls, test harnesses, and ongoing monitoring so you can standardise on GPT-5.2 without disrupting critical operations.