Prompt Engineering Assistants: From Fundamentals to Enterprise-Grade Practice

Table of Contents

- Introduction: Why Prompt Engineering Now Matters Strategically
- Prompt Engineering vs Fine-Tuning vs RAG: Strategic Comparison

Introduction: Why Prompt Engineering Now Matters Strategically

Prompt engineering has moved from a hacker trick to a strategic lever. As models like Claude, Gemini, Grok and GPT‑5 become core to content, coding, analysis and customer service, prompts shape time, cost and risk across the business.

For Australian leaders, the question is “How do we use AI assistants in a way that delivers measurable ROI, fits local regulation, and scales beyond a few power users?” Prompt engineering is the lightweight way to steer general models toward your goals without heavy data science projects.

This first part of LYFE AI’s three‑part series focuses on the economic and strategic impact of prompt engineering. We compare prompting with fine‑tuning and retrieval‑augmented generation (RAG), look at model costs and token efficiency, and outline how to govern prompts at scale in an Australian regulatory context. Parts 2 and 3 will go deeper into structures and optimisation; this article is about the business case: when prompts alone are enough, when you need more infrastructure, and how to manage that portfolio like any other critical capability.

https://openai.com, https://anthropic.com

Business and Everyday Impact & ROI of Better Prompts

Better prompts reduce waste. Structured prompts cut drafting and reporting time by reducing clarification and rework, and teams that standardise how they brief AI tools tend to see fewer iteration cycles and simpler workflows. Reported productivity gains vary: some knowledge workers report 30–80% faster drafting with AI tools, MIT Sloan finds performance boosts of nearly 40% for skilled workers, and GitHub Copilot users complete coding tasks over 50% faster. All of these rely on clear, structured instructions to the assistant. At the same time, not every team sees the same lift: one Danish study found no overall reduction in hours worked, and about a quarter of users actually spent longer on tasks when using AI. So while the direction of impact is clear (good prompts save time), the exact percentage will depend on context and maturity. If a policy team spends three days shaping a regulatory summary and a well‑designed assistant reliably cuts that to two, you’ve effectively gained a full day of capacity without extra headcount.

The same applies at home or in small businesses. A vague request like “write a blog about tax” often needs several correction cycles. A clearer prompt that sets audience, length, tone and jurisdiction can reach a usable answer in one or two turns, reducing minutes per task and frustration for non‑technical users.

Each request consumes tokens; every failed attempt or retry adds to the bill. By defining goals, constraints and edge cases upfront, you reduce retries and cut token usage. In agentic workflows – where assistants call tools or orchestrate tasks – ambiguous prompts can trigger slow, expensive failure loops. Clearer instructions shrink those loops and stabilise outcomes so teams trust the system.
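As a rough illustration of how retries compound, the arithmetic can be sketched in a few lines of Python. The token counts, per‑1,000‑token prices and attempt counts below are hypothetical, chosen only to make the comparison visible; substitute your vendor's actual pricing and your own measured retry rates.

```python
def cost_per_task(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k, attempts):
    """Cost of one task when it takes `attempts` model calls to reach a usable answer."""
    per_call = (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k
    return per_call * attempts


# Hypothetical figures: a short vague prompt that needs four attempts on average,
# versus a longer, clearer prompt that needs one and a half.
vague = cost_per_task(200, 800, price_in_per_1k=0.01, price_out_per_1k=0.03, attempts=4)
clear = cost_per_task(500, 800, price_in_per_1k=0.01, price_out_per_1k=0.03, attempts=1.5)

print(f"vague prompt: ${vague:.4f} per task")
print(f"clear prompt: ${clear:.4f} per task")
```

Under these assumed numbers the clearer prompt costs more per call, because it is longer, but less per completed task, because it needs fewer attempts.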

Explicit safety and compliance language inside prompts (“Avoid disclosing personal data; follow AU privacy rules; highlight uncertainty”) can lower the chance of inappropriate or privacy‑sensitive outputs. Under frameworks like Australia’s Privacy Act 1988 and OAIC guidance, this is not a silver bullet, but it is a fast, low‑cost defence layer you control without waiting for model vendors to change defaults.

From a CFO’s perspective, these effects roll up into reduced labour hours, reduced compute spend, and reduced exposure to compliance incidents, achieved through workflow changes rather than scarce AI engineering talent. Many organisations now treat prompt engineering as a capability and weave practical resources on mastering AI prompts into training and governance.

https://www.ibm.com, https://www.oaic.gov.au

Business and Everyday Value: From Toy to Reliable Tool

Prompt engineering turns a general assistant from a novelty into a dependable tool. In chat‑based models, context shapes reasoning; the model reacts to the instructions, examples and constraints you provide. Without deliberate prompting, it behaves like a gifted but unfocused intern; with it, you get something closer to a specialist who understands the brief.

In everyday life, that means asking for “a 5‑day vegetarian meal plan for a family of four in Sydney, using Coles or Woolies ingredients, under $200, with prep under 30 minutes” instead of simply “a meal plan”. This detail lets the assistant weigh budget and prep time, making the answer usable. Likewise, a travel prompt that includes airport codes, dates, budget and accessibility needs will usually require far fewer follow‑ups.

For AI companions and coaching assistants, persona‑based prompting (“behave like a laid‑back yoga teacher who explains things in plain English and never shames the user”) influences tone and structure. This improves engagement and alignment with expectations, especially when paired with a dedicated AI personal assistant configured for everyday Australian life.

In business, structured prompts compress workflows. A consultant can feed bullet‑point notes, a preferred template and style instructions to the model instead of drafting from scratch. It generates a first draft in minutes; the consultant reviews and refines. Across a year, that compounds into more billable work or room for deeper analysis, particularly when embedded into broader AI services and workflow solutions.

Organisations can track prompt‑level metrics: accuracy against a gold‑standard answer, latency to a usable result, cost per task in tokens or API dollars, and consistency across users. Some teams use A/B testing tools and workflow analytics to compare prompt versions, retiring weaker ones and promoting strong performers into central libraries. As practices mature, prompt engineering becomes evidence‑driven design, aligning with guidance such as MIT’s recommendations on effective prompts and OpenAI’s prompt engineering best practices.
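A minimal sketch of how such a comparison might be tabulated, assuming each test run is logged with the prompt version, whether the output matched the gold‑standard answer, latency and token count. The `Run` record and the sample data are hypothetical, not taken from any particular analytics tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Run:
    prompt_version: str
    correct: bool      # did the output match the gold-standard answer?
    latency_s: float   # seconds until a usable result
    tokens: int        # total tokens consumed by the run


def summarise(runs, version):
    """Average accuracy, latency and token use for one prompt version."""
    rows = [r for r in runs if r.prompt_version == version]
    if not rows:
        raise ValueError(f"no runs recorded for version {version!r}")
    return {
        "accuracy": mean(1.0 if r.correct else 0.0 for r in rows),
        "avg_latency_s": mean(r.latency_s for r in rows),
        "avg_tokens": mean(r.tokens for r in rows),
    }


# Hypothetical log of four runs across two prompt versions.
runs = [
    Run("A", True, 2.0, 900),
    Run("A", False, 4.0, 1100),
    Run("B", True, 1.0, 600),
    Run("B", True, 3.0, 800),
]

for version in ("A", "B"):
    print(version, summarise(runs, version))
```

In practice you would want far more than a handful of runs per version before retiring a prompt; the sketch only shows the shape of the bookkeeping.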

https://wandb.ai, https://weightsandbiases.com

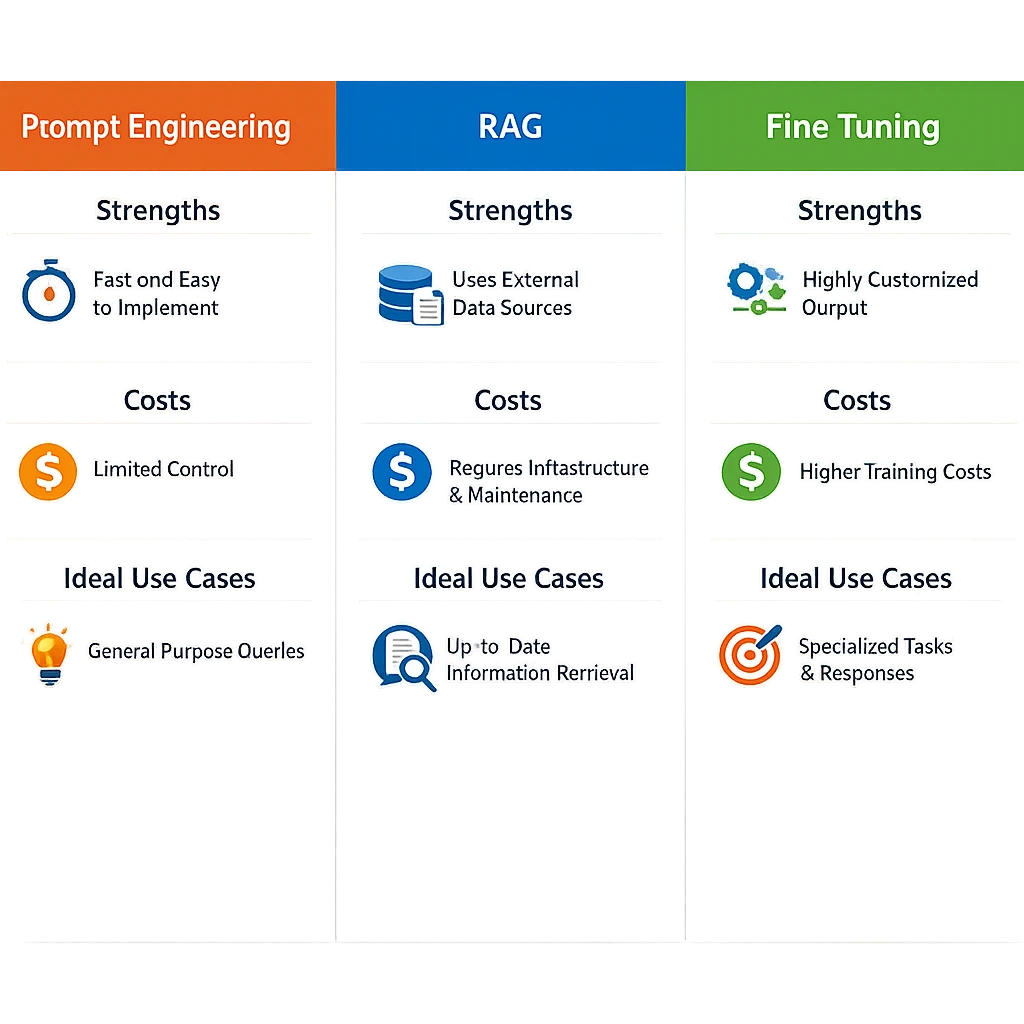

Prompt Engineering vs Fine-Tuning vs RAG: Strategic Comparison

Decision‑makers often ask: “Should we keep improving prompts, or do we need fine‑tuning or RAG as well?” Each technique occupies a different place in your AI strategy, with its own economics.

Prompt engineering is often “fine‑tuning lite”. With no extra training, smart prompting can recover a large share of the performance you’d expect from fine‑tuning on tasks like classification, triage or standardised summarisation, in some cases approaching expert‑level results. For example, GPT‑4–based ChatGPT and untrained doctors showed very similar agreement levels on medical triage (κ≈0.67 vs κ≈0.68), both outperforming GPT‑3.5, and a raw GPT‑4 model using only prompts (no additional training) performed comparably (κ≈0.65). A hypothetical best‑case integration of these systems reached κ≈0.84, which suggests that untuned, well‑prompted LLMs capture a substantial portion of the possible gains through good prompt design alone. You won’t always match a well‑trained, task‑specific model, but smart prompting, clear instructions and structured outputs can deliver strong, production‑ready performance for many everyday workflows without the cost and complexity of a full fine‑tuning pipeline.

The payoff varies a lot by task and domain, and isn’t guaranteed. In some setups, especially with strong few‑shot prompts, performance can come very close to a fully fine‑tuned model, and there are even cases where few‑shot prompting slightly outperforms fine‑tuning. But for more specialised or complex domains (think legal, medical, or bespoke internal taxonomies), the gap between prompting alone and a well fine‑tuned model can be large. In practice, prompt engineering can get you surprisingly far, yet whether it replaces or just complements fine‑tuning depends heavily on your specific use case and accuracy requirements. It is model‑agnostic, cheap to iterate, and usually the right place to start, especially when you lack large, clean datasets.
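For readers unfamiliar with the κ figures in the triage example, the sketch below shows how an agreement score of this kind is computed. It assumes the values are Cohen's kappa between two raters, which the text does not state explicitly, and the labels are invented for illustration. Kappa compares observed agreement with the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e).

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # p_o: share of items where the two raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: agreement expected if each rater labelled at random with their own label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Invented triage labels from a model and a reference rater.
model = ["urgent", "urgent", "routine", "routine"]
reference = ["urgent", "routine", "routine", "routine"]
print(cohens_kappa(model, reference))  # 0.5
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance, which is why values in the 0.65 to 0.68 range are read as substantial but imperfect agreement.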

Fine‑tuning becomes attractive when you need very fixed, brand‑aligned behaviour or want to leverage proprietary labelled data at scale. It typically requires at least a thousand good examples and significant compute. For many organisations, the real break‑even point isn’t a single number like ‘ten thousand examples’, but a function of data volume, model performance gains, and total implementation cost. At smaller scales, the extra engineering work, data preparation, evaluation burden, and privacy/compliance overheads often outweigh the upside compared with pushing harder on prompting, system‑prompt design, retrieval, or stronger base models. This isn’t a hard rule so much as a practical guideline: the exact threshold depends on factors like task complexity, data quality, compliance requirements (especially around privacy), and the fine‑tuning method you use. Newer, more efficient approaches such as parameter‑efficient fine‑tuning can shift that break‑even point, but the core trade‑off (upfront fine‑tuning cost and risk versus prompt‑engineering agility) remains the same. Above the break‑even point, fine‑tuning can lower per‑task costs and boost reliability for narrow tasks like claims triage or document classification.

Retrieval‑augmented generation (RAG) sits in a different lane. Instead of changing model weights, you give the model live access to your content via search or a vector database, then prompt it to answer using that material. This is powerful for knowledge‑heavy tasks – policy portals, product knowledge bases, legal archives – but demands infrastructure, data pipelines and maintenance. It also doesn’t remove the need for good prompts: the assistant still needs instructions on how to use retrieved documents, how to cite sources, and when to escalate.
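The retrieve‑then‑prompt pattern can be sketched as follows. This is a toy illustration only: it ranks documents by simple word overlap instead of a real search index or vector database, and the document ids, texts and instruction wording are hypothetical.

```python
def overlap_score(query, text):
    """Number of distinct words the query and the document share (a crude relevance proxy)."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query, docs, k=2):
    """Return the k (doc_id, text) pairs that share the most words with the query."""
    ranked = sorted(docs.items(), key=lambda item: overlap_score(query, item[1]), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=2):
    """Assemble a prompt that tells the model how to use, cite and escalate on the sources."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(query, docs, k))
    return (
        "Answer using only the sources below. Cite source ids in square brackets. "
        "If the sources do not cover the question, say so and escalate to a human.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


# Hypothetical internal policy snippets.
docs = {
    "leave-policy": "annual leave accrues at four weeks per year",
    "expense-policy": "claim expenses within 30 days with receipts",
    "it-policy": "reset passwords every 90 days",
}
query = "how much annual leave per year"
print(build_prompt(query, docs, k=1))
```

A production system would swap `retrieve` for an embedding or keyword search service, but the prompt still has to carry the usage, citation and escalation instructions, as the sketch shows.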

Strategically, prompting sits at the “low cost, fast iteration” end; RAG at “medium cost, high knowledge coverage”; and fine‑tuning at “higher up‑front cost, strong repeatability for narrow tasks”. In many Australian organisations, the best path is sequential: start with robust prompt design, add RAG for document‑heavy workflows, and only then consider fine‑tuning where data volume and regulatory comfort justify it, ideally supported by an AI IT support partner for Australian SMBs.

https://arxiv.org, https://research.ibm.com

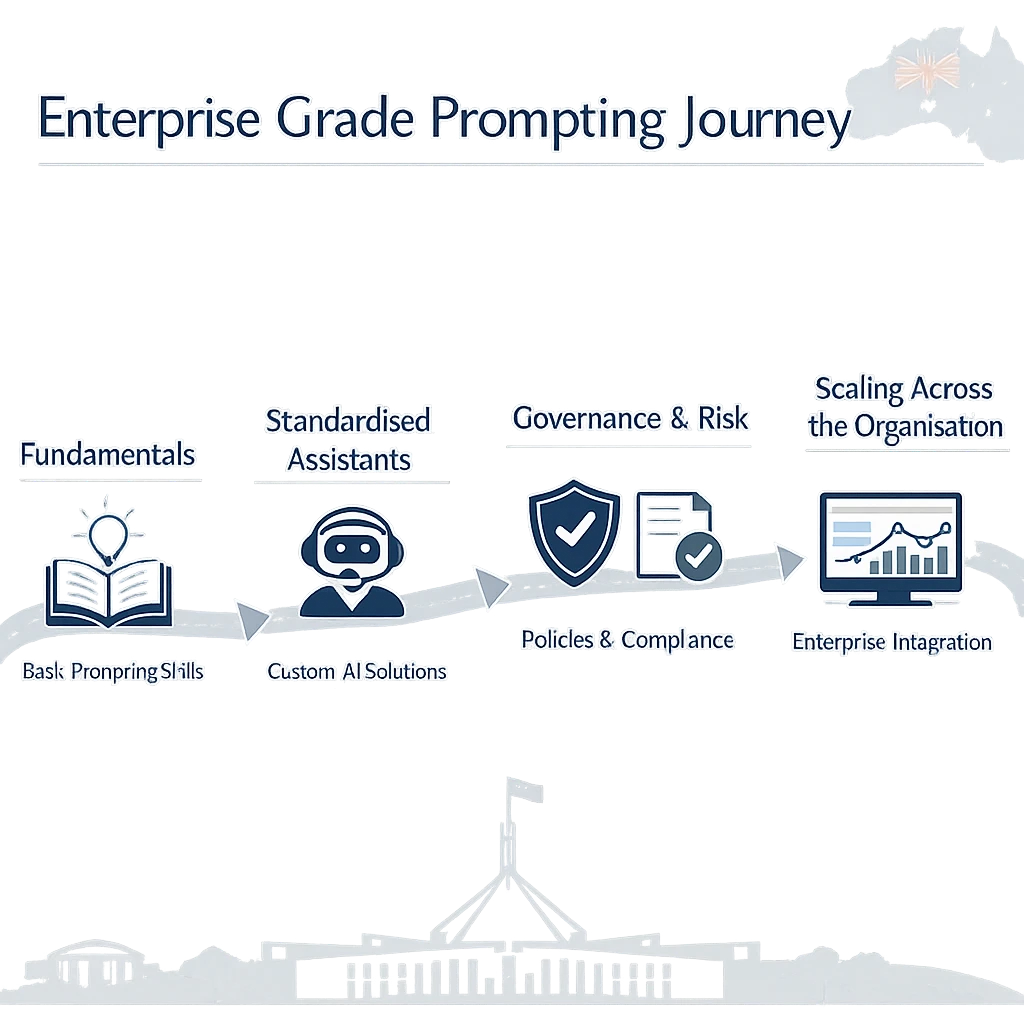

Enterprise-Grade Prompting: ROI, Scaling, and Governance

Once a few teams prove the value of prompt engineering, the challenge shifts from isolated wins to enterprise scale. Prompts stop being personal notes and become product assets: they need ownership, version control, approvals and metrics, much like software code.

Organisations build simple models for tracking value. A bank might measure average time to produce a customer‑facing letter before and after an internal assistant, finding a 40% reduction via chained prompts that collect context, draft text and self‑review. Support teams can monitor resolution rates and handle times when agents use prompt‑driven tools. These KPIs help prioritise which prompts to improve and which initiatives to scale.

Governance means treating prompts as part of your control surface. Enterprises can maintain central libraries where reviewed, approved patterns live. Changes are tracked in Git or similar systems, sometimes behind feature‑flag platforms like LaunchDarkly so new versions can be rolled out, rolled back or A/B tested. This prevents “configuration drift”, where different teams quietly fork prompts and you lose oversight, and aligns with widely cited prompt‑engineering governance practices.
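To make the idea of versioned, reversible prompt changes concrete, here is a small in‑memory sketch. It is not how Git or LaunchDarkly work internally; the `PromptLibrary` class and its method names are hypothetical, and a real deployment would persist the history in version control.

```python
class PromptLibrary:
    """Keeps every approved version of each prompt and tracks which one is live."""

    def __init__(self):
        self._versions = {}  # prompt name -> list of {"text", "approved_by"}, oldest first
        self._active = {}    # prompt name -> index of the live version

    def publish(self, name, text, approved_by):
        """Record a newly approved version and make it live. Returns its 1-based version number."""
        history = self._versions.setdefault(name, [])
        history.append({"text": text, "approved_by": approved_by})
        self._active[name] = len(history) - 1
        return len(history)

    def rollback(self, name):
        """Make the previous version live again. Returns the now-live version number."""
        if self._active[name] == 0:
            raise ValueError("no earlier version to roll back to")
        self._active[name] -= 1
        return self._active[name] + 1

    def get(self, name):
        """Text of the live version."""
        return self._versions[name][self._active[name]]["text"]


library = PromptLibrary()
library.publish("customer-letter", "Draft a polite letter.", approved_by="risk-team")
library.publish("customer-letter", "Draft a polite letter in plain English.", approved_by="risk-team")
library.rollback("customer-letter")
print(library.get("customer-letter"))
```

Because every team reads the live version from one place, a rollback takes effect everywhere at once, which is the property that guards against quiet forks.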

Compliance and safety constraints can be baked into prompts as non‑negotiable prefaces, especially for customer‑facing workflows. A default instruction like “Follow AU Privacy Act principles, anonymise personal data, do not invent legal advice, and flag any apparent bias in the source material” can be attached to prompts across HR, legal or risk. This does not replace safeguards like access control, logging and human review, but reduces behavioural variance between users.

For non‑technical teams, visual builders and no‑code tools can expose prompt templates as simple forms: fields for “tone”, “audience”, “jurisdiction”, “escalation threshold”. Behind the scenes, these values slot into more complex system prompts and chains. Success can be measured with pre‑/post‑accuracy scores, satisfaction ratings and workflow analytics. Over time, the organisation builds a living library of prompts and patterns that embody domain knowledge much the way internal playbooks or SOPs once did.
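A minimal sketch of how form fields might slot into a system prompt, using Python's standard `string.Template`. The template wording and the `render` helper are illustrative assumptions; the field names follow the examples in the paragraph above.

```python
from string import Template

# Hypothetical system-prompt template; each $placeholder corresponds to one form field.
SYSTEM_PROMPT = Template(
    "Write in a $tone tone for $audience. Apply $jurisdiction rules. "
    "Escalate to a human if confidence is below $escalation_threshold."
)

REQUIRED_FIELDS = ("tone", "audience", "jurisdiction", "escalation_threshold")


def render(form):
    """Fill the template from form values, refusing to run with blank or missing fields."""
    missing = [name for name in REQUIRED_FIELDS if not form.get(name)]
    if missing:
        raise ValueError(f"missing form fields: {', '.join(missing)}")
    return SYSTEM_PROMPT.substitute(form)


form = {
    "tone": "formal",
    "audience": "retail banking customers",
    "jurisdiction": "Australian",
    "escalation_threshold": "80%",
}
print(render(form))
```

Validating the form before rendering means a user cannot accidentally send a prompt with an empty jurisdiction or escalation rule, which is one way a form makes a template hard to misuse.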

https://launchdarkly.com, https://github.com

Model-Specific Prompting and Token Cost Optimisation

Not all models behave the same way, and prompt engineering needs to reflect that. Larger models with longer context windows can absorb more background material and instructions but cost more per token. Smaller or distilled models are cheaper but may need more explicit guidance, stepwise structure and guardrails to reach acceptable quality. Choosing the right mix becomes a budgeting and architecture decision.

Token efficiency is critical. Long prompts with repeated instructions burn input tokens; overly verbose outputs burn output tokens. Careful prompt design trims unnecessary text, uses compact but precise wording, and encourages concise answers unless detail is needed. This can shave a meaningful percentage off recurring API spend across a large user base, particularly when you pair model choice with operational guides such as the complete guide to GPT‑5 and other next‑generation assistants.

Different vendors and models handle prompts differently. Some weigh system‑level instructions heavily; others respond strongly to examples or structural cues like headings or bullet lists. Enterprises often run small evaluations: take a handful of critical tasks, design a few prompt variants, and test them across candidate models. Measure quality, latency and cost, then standardise on the best combination per use case.

In more advanced setups, you may mix models: a smaller, cheaper assistant handles routing, classification or triage using tight prompts, while a larger model is reserved for complex drafting or reasoning. Prompt engineering is the glue across this stack, defining when to escalate, what context to pass along, and how to express constraints consistently so users experience a single coherent assistant.
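The escalation logic can be sketched as a simple routing rule. The model names, task categories and token limit here are placeholders, not recommendations for any particular vendor.

```python
# Hypothetical task types that a smaller, cheaper model is trusted to handle.
SIMPLE_TASKS = {"routing", "classification", "triage"}


def choose_model(task_type, input_tokens, small_context_limit=8000):
    """Send simple, short tasks to the small model; escalate everything else."""
    if task_type in SIMPLE_TASKS and input_tokens <= small_context_limit:
        return "small-model"
    return "large-model"


def build_handoff(task_type, input_tokens, context_summary, constraints):
    """Package what the chosen model needs so constraints are expressed the same way everywhere."""
    return {
        "model": choose_model(task_type, input_tokens),
        "context": context_summary,
        "constraints": list(constraints),
    }


shared_constraints = ["Follow AU Privacy Act principles", "Highlight uncertainty"]
print(build_handoff("triage", 500, "Customer asks about a late fee.", shared_constraints))
print(build_handoff("drafting", 500, "Customer asks about a late fee.", shared_constraints))
```

Passing the same constraint list to both tiers is what lets users experience one coherent assistant even though two models are answering.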

As context windows expand in next‑generation models, more of what previously required fine‑tuning or RAG may be achievable with prompt‑and‑context alone, especially for medium‑sized organisations. That does not remove the need for data infrastructure, but it shifts some investment from heavy training runs toward designing and governing rich prompt structures that exploit longer windows intelligently, drawing on playbooks such as IBM’s 2026 prompt‑engineering guide and Cisco Outshift’s work on advanced AI assistants.

https://platform.openai.com/pricing, https://anthropic.com/pricing

Australian Regulatory Context and Risk Management

For Australian organisations, prompt engineering sits inside a specific legal frame. The Privacy Act 1988, OAIC guidance, and sector‑specific rules in finance, health and government all shape what is acceptable when using AI assistants with real customer or citizen data. Even if model providers host data offshore, local entities remain accountable for how personal information is used and protected.

Prompts should be designed with data minimisation in mind. A compliance‑aware assistant should not ask staff to paste full Medicare numbers or detailed health notes unless necessary, and then only inside approved systems with proper logging. Prompts can encourage pseudonymisation (“use generic labels like Customer A, B, C”) and steer assistants toward policy‑level guidance rather than judgement on specific individuals, unless those workflows have been explicitly risk‑assessed.
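As one illustration of pseudonymisation before text reaches an assistant, the sketch below swaps known names for generic labels and masks long identifier‑like numbers. The regular expression is a crude assumption about what a 10 or 11 digit identifier looks like, not a validated Medicare format, and a real control would sit inside an approved system with logging.

```python
import re
from string import ascii_uppercase

# Crude, assumed pattern: 4 digits, 5 digits, then 1 or 2 digits, with optional single spaces.
ID_NUMBER = re.compile(r"\b\d{4} ?\d{5} ?\d{1,2}\b")


def pseudonymise(text, names):
    """Replace each known name with 'Customer A', 'Customer B', ... and mask id-like numbers.

    Returns the cleaned text and the name-to-label mapping, which should stay
    inside the approved system so staff can re-identify records when permitted.
    """
    if len(names) > len(ascii_uppercase):
        raise ValueError("this sketch supports at most 26 names")
    mapping = {}
    for index, name in enumerate(names):
        label = f"Customer {ascii_uppercase[index]}"
        mapping[name] = label
        text = re.sub(rf"\b{re.escape(name)}\b", label, text)
    return ID_NUMBER.sub("[REDACTED NUMBER]", text), mapping


# Invented example text.
note = "Jane Citizen (Medicare 2123 45670 1) disputes a charge raised by Raj Patel."
cleaned, mapping = pseudonymise(note, ["Jane Citizen", "Raj Patel"])
print(cleaned)
```

The sketch only handles names you already know about; free‑text notes can contain other identifying details, so it complements the prompt‑level guidance and human review described above and does not replace them.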

There is growing attention on fairness and transparency. Assistants that support lending decisions, hiring or eligibility assessments should include instructions to explain reasoning in plain language, avoid protected‑attribute‑based recommendations, and flag situations where training data might be biased. These constraints will not fix systemic bias alone, but they create a documented expectation of behaviour that can be audited and iterated.

Many Australian enterprises are experimenting with internal AI use policies that explicitly mention prompts: where they may be used, how to write them safely, and which templates are approved for sensitive cases. Training staff to recognise risky prompts – asking for legal decisions, sharing identifiable customer details, or bypassing controls – is part cultural change, part skills uplift, and is often delivered alongside broader AI governance and enablement services tailored to local regulations.

A well‑governed prompt library can speed compliance reviews. Instead of assessing every AI interaction ad‑hoc, risk and legal teams review standard prompts and templates, embedding required language and escalation paths. Users then assemble workflows from pre‑vetted building blocks, staying inside a safer, more predictable operating envelope while still benefiting from AI‑driven efficiency.

https://www.legislation.gov.au/Series/C2004A03712, https://www.oaic.gov.au/privacy/guidance-and-advice

Practical Applications and Tips for Decision-Makers

Start with a short list of high‑leverage workflows and treat them as pilot projects for prompt‑driven transformation. Good candidates include report drafting, customer email responses, internal knowledge search, and basic data analysis for finance or operations, often delivered through a secure Australian AI assistant that meets local data‑handling expectations.

For each workflow, map the current steps: what inputs humans receive, what outputs they produce, and how long it takes. Then design prompts that mirror this structure – taking in the same inputs, generating the same outputs, and respecting existing approvals. Run side‑by‑side tests for a few weeks. Track turnaround time, quality (via human rating), and any incidents or near‑misses. This evidence base helps separate hype from real value and makes budget conversations easier.

Encourage teams to treat prompts as shared assets, not personal secrets. Set up an internal repository where people contribute successful patterns, annotate them with use cases and caveats, and rate performance. Over time, a few “gold standard” prompts will emerge. Those can then be refactored into formal templates, wired into internal tools, or wrapped with user interfaces that make them hard to misuse.

Invest modestly in literacy. Short training sessions on how assistants work, why prompts matter, and what “good” looks like will often produce outsized returns. When staff understand that specificity saves time, that constraints reduce risk, and that prompts can be improved like any other process, they begin experimenting in more disciplined ways, especially when guided by resources like the Prompt Engineering Playbook for programmers and industry best‑practice checklists.

Put a light governance wrapper around this early. Define who owns the prompt library, how changes are approved, and how performance is monitored. Align prompts with Australian privacy and sector rules from the outset, so you are not retro‑fitting controls after organic growth. With these foundations, you can move from scattered experiments to a coherent ecosystem of prompt‑engineered assistants that shift productivity and risk in your favour, whether you are deploying AI transcription tools in clinical settings, rolling out secure AI transcription pipelines, or helping staff become AI‑confident teachers and trainers.

https://www.ibm.com, https://www.oaic.gov.au

Conclusion and Next Steps

Prompt engineering is a strategic capability that shapes the economics, risk profile and competitiveness of modern organisations. By deliberately designing how assistants are instructed, you can unlock faster workflows, lower compute spend, and safer behaviour – often without heavy infrastructure or complex training.

For Australian leaders, the opportunity is to move first on governance and scale: build prompt libraries, align them with local regulation, and experiment with a clear ROI lens. In the next parts of this series, we will dig into concrete prompt design patterns and optimisation techniques; for now, the key step is to treat prompts as first‑class assets and start measuring their impact. If you are ready to map out where prompt‑engineered assistants fit in your organisation, assemble a small cross‑functional group, choose a few pilot workflows, and begin – particularly once you find the right AI implementation partner and start applying well‑researched prompt‑engineering techniques in practice.

https://www.oaic.gov.au, https://arxiv.org

To continue the journey, explore the next instalments in this LYFE AI series on prompt engineering, where we move from strategy into hands‑on design and optimisation for your assistants.

Frequently Asked Questions

What is prompt engineering and why does it matter for businesses?

Prompt engineering is the practice of designing, structuring and iterating the instructions you give AI assistants so they consistently produce useful, accurate outputs. It matters for businesses because better prompts directly reduce rework, speed up tasks, lower AI usage costs, and reduce risks like hallucinations or non‑compliant answers.

How can prompt engineering improve ROI from AI assistants in my company?

Good prompt engineering can cut the time staff spend fixing AI outputs, make self‑service workflows more reliable, and reduce the number of tokens (and therefore dollars) you spend per task. When prompts are standardised and embedded into tools and templates, you get repeatable outcomes, which translates into measurable productivity gains, better customer experiences, and clearer cost per use.

When is prompt engineering enough versus when do I need fine-tuning or RAG?

Prompt engineering is usually enough when you’re dealing with general reasoning, content drafting, or tasks that rely on public or lightly customised information. You typically need fine‑tuning or retrieval‑augmented generation (RAG) when the model must reliably use your proprietary data, match strict domain language, or comply with complex policies where “just prompting” fails consistently in testing.

What is the difference between prompt engineering, fine-tuning and RAG?

Prompt engineering shapes the model’s behaviour using instructions and examples at request time, without changing the model itself. Fine‑tuning modifies the model weights using your labelled data so it learns your style or domain, while RAG connects the model to external knowledge sources (like your document store) so it can retrieve and ground its answers in up‑to‑date, organisation‑specific information.

How do I scale prompt engineering across an enterprise without chaos?

To scale prompt engineering, you need shared prompt libraries, version control, and clear ownership for critical prompts used in customer‑facing or regulated workflows. Leading teams treat prompts like software assets: they document them, test them across edge cases, monitor performance, and apply approval and change‑management processes rather than letting every team improvise in isolation.

How does LYFE AI help organisations build enterprise-grade prompt engineering practices?

LYFE AI works with organisations to design prompt frameworks, governance models and assistant workflows tailored to their business and regulatory environment. This includes identifying high‑ROI use cases, building reusable prompt templates, optimising prompts for specific models and costs, and setting up monitoring and guardrails so AI assistants perform reliably at scale.

How can I optimise prompt engineering for model costs and token efficiency?

Cost‑aware prompt engineering focuses on being as short as possible while still being unambiguous, reusing shared system prompts, and minimising unnecessary context. By understanding how different models price input and output tokens, you can choose cheaper models for simple tasks, structure prompts to reduce long histories, and use retrieval or summaries instead of pasting whole documents.

What should Australian organisations consider about regulation and risk when using AI assistants?

Australian organisations need to align AI use with privacy law, sector‑specific regulations, and emerging AI governance expectations, particularly around data residency, human oversight and transparency. That means designing prompts that avoid eliciting sensitive data, documenting how AI is used in decision‑making, and putting review checkpoints around high‑impact use cases like credit, employment, or health decisions.

What are some practical prompt engineering tips for non-technical decision-makers?

Start by clearly stating the role of the AI (e.g. “You are a compliance analyst for an Australian bank”), the task, the constraints, and what a good answer looks like. Then iterate on prompts using real examples from your workflows, compare outputs from different structures, and gradually turn the best versions into standard templates your teams can reuse.

How can I move from experimenting with AI ‘toys’ to reliable business tools?

Move from ad‑hoc chats to defined use cases, documented prompts, and simple success metrics like time saved, error rate, or customer satisfaction impact. Partnering with specialists like LYFE AI can help you audit current experiments, design production‑ready assistants with governance built in, and ensure your AI usage aligns with both business strategy and Australian regulatory expectations.